Part 1: Host Triage Tool Buyer’s Guide

I often encounter companies that are starting to think more formally about incident response and how to properly deal with incidents. To help them with that process, I wanted to create a series of blog posts about responding to an endpoint and what to look for.

This is the first article in that series and it focuses on tools and things to consider when picking them. There are lots of tools out there, so I wanted to give some criteria that you should think about when evaluating them. At the end, we’ll look at a handful of tools and evaluate them against the criteria.

Host Triage

To start the series, let’s be clear on what I mean by host triage. I use that term to refer to the analysis of an endpoint or server to answer some specific investigative questions. Namely:

- Is this computer compromised?

- If so, how badly and have I seen it before?

You will often be asking these questions in response to a SIEM alert or some other indicator of strange behavior.

Host triage does not answer every investigative question, but it covers what companies care about most. Many of them use the answers to these triage questions to decide whether to wipe the system or call in outside experts.

To answer the triage questions, you’re going to need to collect data from the remote endpoint and analyze it. That sounds easy, but there are many different ways to do it. Some companies collect from endpoints continuously, while others collect only after an alert. Some tools require lots of manual analysis to interpret the data, and others do some of the work for you.

In the rest of this article, we are going to talk about considerations for picking the collection and analysis tools. In future articles, we’ll focus on the specific data that we are collecting and what to look for during the analysis.

My evaluation criteria break into three dimensions:

- Evolution based on new threats

- Based on your security team

- Based on your IT environment

Let’s go over these criteria and look at some examples.

Evolution Based on Threats

Attackers are constantly changing their techniques and looking for different ways of getting in, staying in, and hiding. So, your triage techniques must evolve too.

Consider how your tools support this. If they are largely manual, then you may be responsible for bringing in new tools and techniques. If the tools are automated, find out how they stay up to date and whether you can customize them when you need to look for something they don’t know about.

Security Team Considerations

Let’s first talk about your team. There is no point in buying tools that your team doesn’t have the experience or resources to use. As a physical-world analogy, your office probably has a basic first aid kit, but it doesn’t stock scalpels, syringes, and other things that only EMTs, nurses, and doctors should be using. That’s because you’re going to call an ambulance if anything really bad happens.

Your cyber incident response capability should be similar. Have tools that your team can use and don’t bother with things that your outside help or expert teams will bring in.

Collaboration

If you have more than one person on your IR team, then it is important for your software to support collaboration. This prevents information and knowledge from being in only one person’s head.

Some host triage tools produce only reports, and it is up to you to distribute the results among your team. Other tools store the data in a central place so that multiple users can access it. Others allow for real-time collaboration.

However, tools that provide collaboration will likely require more hardware resources to operate, since they will probably need a server or other appliance. If you have a one-person team, consider a solution that can scale and grow with your team.

Automation

When you take family pictures, do you manually choose the shutter speed or do you let the camera decide? The average person takes better pictures when the camera makes some of the decisions, but an expert photographer can use the manual settings to make amazing photos.

Incident response tools are similar. An IR expert can do amazing things with manual investigation tools, but your average company won’t have an IR expert and should instead get help from automation.

An automated solution will perform a series of steps for you and will point out suspicious things that should be investigated further. 100% automation is not possible, but the tools can certainly help. This makes you faster and more efficient. Even if your team is experienced, automation helps them to make fewer mistakes and helps train new junior responders.
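To make "point out suspicious things" concrete, here is a minimal Python sketch of rule-based flagging. It assumes process records have already been collected; the `ProcessRecord` type, the three rules, and the sample paths are hypothetical illustrations, not how any particular product works. Because each rule is a plain function, a team can append its own rules when it needs to look for something the defaults don’t know about.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class ProcessRecord:
    name: str
    path: str
    signed: bool


# A rule returns a short reason when a record looks suspicious, otherwise None.
Rule = Callable[[ProcessRecord], Optional[str]]

# Hypothetical "expected" locations for svchost.exe on 64-bit Windows.
SYSTEM_DIRS = ("c:\\windows\\system32\\", "c:\\windows\\syswow64\\")


def runs_from_temp(rec: ProcessRecord) -> Optional[str]:
    return "runs from a temp directory" if "\\temp\\" in rec.path.lower() else None


def unsigned(rec: ProcessRecord) -> Optional[str]:
    return None if rec.signed else "executable is not signed"


def svchost_outside_system_dirs(rec: ProcessRecord) -> Optional[str]:
    # svchost.exe is a common name for malware to borrow.
    if rec.name.lower() == "svchost.exe" and not rec.path.lower().startswith(SYSTEM_DIRS):
        return "svchost.exe outside System32/SysWOW64"
    return None


DEFAULT_RULES: List[Rule] = [runs_from_temp, unsigned, svchost_outside_system_dirs]


def triage(records: List[ProcessRecord], rules: List[Rule]) -> List[Tuple[ProcessRecord, List[str]]]:
    """Apply every rule to every record; return only records with at least one reason."""
    findings = []
    for rec in records:
        reasons = [reason for reason in (rule(rec) for rule in rules) if reason]
        if reasons:
            findings.append((rec, reasons))
    return findings


if __name__ == "__main__":
    records = [
        ProcessRecord("svchost.exe", r"C:\Windows\System32\svchost.exe", True),
        ProcessRecord("svchost.exe", r"C:\Users\bob\AppData\Local\Temp\svchost.exe", False),
    ]
    for rec, reasons in triage(records, DEFAULT_RULES):
        print(rec.path, "->", "; ".join(reasons))
```

Real tools use far richer heuristics and malware scanning, but the shape is the same: automation narrows the data down to a short list that a person then investigates.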

Investigation Depth

Incident response-based investigations tend to have two levels of depth:

- Triage: Do I care about this?

- Deep Dive: Root cause analysis with questions about who, what, when, and where.

Know how deep your team is expected to go. Many companies will hire outside consultants to do the deep dive, in which case you need to focus only on triage. Other companies want to be able to handle everything.

Either approach can work, but make sure your tool choice is consistent with it. Just as your company’s first aid kit doesn’t have scalpels, your incident response kit may not need deep dive features.

Integration

If you have a SIEM or ticketing system, consider whether it is important for it to integrate with your triage tools. Possible integrations include:

- Automatically start collection (or a more in-depth collection if you have continuous collection) based on an alert or ticket.

- Feed the analysis results back into the SIEM or ticketing system so that other tools can leverage them.

Typically, the more automated an investigation solution is, the more integration options it offers.
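As an illustration of the first kind of integration, the Python sketch below turns a SIEM alert into a collection job and builds a summary to post back to the ticket. The alert’s JSON fields (`host`, `severity`, `rule`, `ticket`), the severity-to-depth mapping, and the `CollectionJob` type are all hypothetical; real SIEMs and ticketing systems each have their own schemas and APIs.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from alert severity to how much data to collect.
SEVERITY_TO_DEPTH = {"medium": "triage", "high": "full", "critical": "full"}


@dataclass
class CollectionJob:
    hostname: str
    depth: str
    reason: str
    ticket_id: Optional[str] = None


def job_from_alert(raw_alert: str) -> Optional[CollectionJob]:
    """Turn a (hypothetical) SIEM alert JSON document into a collection job.

    Returns None for severities that should not trigger collection.
    """
    alert = json.loads(raw_alert)
    depth = SEVERITY_TO_DEPTH.get(str(alert.get("severity", "")).lower())
    if depth is None:
        return None
    return CollectionJob(hostname=alert["host"], depth=depth,
                         reason=alert.get("rule", "unknown rule"),
                         ticket_id=alert.get("ticket"))


def ticket_update(job: CollectionJob, suspicious_items: int) -> str:
    """Build a JSON summary to post back to the ticket once analysis finishes."""
    return json.dumps({"ticket": job.ticket_id, "host": job.hostname,
                       "depth": job.depth, "suspicious_items": suspicious_items})


if __name__ == "__main__":
    alert = '{"host": "WKS-042", "severity": "High", "rule": "Beaconing", "ticket": "IR-17"}'
    job = job_from_alert(alert)
    print(job)
    if job:
        print(ticket_update(job, suspicious_items=2))
```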

Preservation

It is often important for you to save the data that you collect during the triage.

- For regulated industries, preserved data can help during an audit if there are questions about the thoroughness of the investigation.

- Future incidents could be related, so having as much information as possible about previous incidents can provide context.

- This incident could be the tip of the iceberg and lead to a large investigation with legal implications. Preserving the initial evidence could be important if the bad guy starts to clean up and delete evidence.

Some tools are only a graphical interface that runs on the target computer and do not produce preservable results. We recommend having a process that allows you to preserve the results, which may mean that you need to do extra steps to copy the results if the tools don’t do it for you.
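If your tools don’t preserve results for you, one low-effort process is to hash every output file and record the hashes in a manifest, so you can later show the data hasn’t changed. This is a minimal Python sketch of that idea; the `manifest.json` file name and its fields are our own choices, not a standard format.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large outputs don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(results_dir: Path, case_id: str) -> Path:
    """Record size and SHA-256 of every collected file in results_dir/manifest.json."""
    files = []
    for p in sorted(results_dir.rglob("*")):
        if p.is_file() and p.name != "manifest.json":
            files.append({"file": p.relative_to(results_dir).as_posix(),
                          "size": p.stat().st_size,
                          "sha256": sha256_of(p)})
    manifest = {"case_id": case_id,
                "created_utc": datetime.now(timezone.utc).isoformat(),
                "files": files}
    out = results_dir / "manifest.json"
    out.write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return out


def verify_manifest(results_dir: Path) -> List[str]:
    """Return files that are missing or whose hash no longer matches."""
    manifest = json.loads((results_dir / "manifest.json").read_text(encoding="utf-8"))
    bad = []
    for entry in manifest["files"]:
        path = results_dir / entry["file"]
        if not path.is_file() or sha256_of(path) != entry["sha256"]:
            bad.append(entry["file"])
    return bad


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        root = Path(d)
        (root / "netstat.txt").write_text("sample output", encoding="utf-8")
        write_manifest(root, "IR-17")
        print(verify_manifest(root))  # prints [] because nothing changed
```

Copy the manifest (or at least its hash) somewhere separate from the results, such as the ticket, so that someone who can edit the results can’t quietly rewrite the manifest too.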

IT Endpoint Considerations

The previous section was about tool buying considerations based on your security team. Now, let’s talk about considerations based on your IT infrastructure. I separate these because, in many companies, the security team does not have direct control over the endpoints. So, make sure your incident response tools are consistent with the culture of your organization.

Administrator Access

Collecting the data needed to triage a host typically requires administrator-level access. So, your options are either:

- Have a software agent that is always running on the host as admin.

- Run one or more collection tools that will require an administrator password to run.

Some companies don’t want to install agents because they can introduce security risks or there are concerns about impacting endpoint stability.
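Whichever option you choose, a collection script should check up front whether it has administrator rights, since running without them usually produces incomplete data rather than an error. Below is a minimal Python sketch, assuming a Windows endpoint. It calls `IsUserAnAdmin` from `shell32`; Microsoft recommends calling `CheckTokenMembership` directly instead, so treat this as a convenience shortcut. On other platforms it falls back to a root check.

```python
import ctypes
import os
import sys


def is_admin() -> bool:
    """Return True if the current process has administrator/root rights."""
    if os.name == "nt":
        try:
            # shell32!IsUserAnAdmin returns nonzero for an elevated administrator.
            return bool(ctypes.windll.shell32.IsUserAnAdmin())
        except (AttributeError, OSError):
            return False
    # Non-Windows fallback: effective UID 0 means root.
    return os.geteuid() == 0


def warn_if_not_admin() -> bool:
    """Print a warning to stderr when not elevated; return the admin status."""
    admin = is_admin()
    if not admin:
        print("WARNING: not running as administrator; collected data will be incomplete.",
              file=sys.stderr)
    return admin


if __name__ == "__main__":
    warn_if_not_admin()
```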

If the security team does not have administrator-level access to hosts and there are not agents running, then make sure that your collection solution is easy to use by the local IT person who will likely need to do the collection. In my experience, this means:

- Easy to email or send to the local IT person

- Easy for them to run

- Easy to get data back to the security team either via file sharing or by automatically sending results over the network.
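For the last point, bundling all outputs into a single archive named after the host and the collection time gives the local IT person one file to send. Here is a Python sketch using only the standard library; the `<hostname>_<UTC time>.zip` naming scheme is an assumption, not a convention any tool requires.

```python
import socket
import zipfile
from datetime import datetime, timezone
from pathlib import Path


def package_results(results_dir: Path, out_dir: Path) -> Path:
    """Zip every file under results_dir into out_dir/<hostname>_<UTC time>.zip.

    out_dir must not be inside results_dir, or the archive would try to include itself.
    """
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    archive = out_dir / f"{socket.gethostname()}_{stamp}.zip"
    out_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for p in sorted(results_dir.rglob("*")):
            if p.is_file():
                # Store paths relative to results_dir so the archive unpacks cleanly.
                zf.write(p, arcname=p.relative_to(results_dir).as_posix())
    return archive
```

Putting the host name in the file name matters more than it seems: when several offices send results back, it is the quickest way to tell them apart.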

Remote Connectivity

If you have remote offices, then also take into account what connectivity you have between the security team computers and the remote hosts. Perhaps there are firewalls in the way that will prevent direct connections or maybe the network connections are not fast enough for large data transfers.

If connectivity is a challenge, consider asynchronous approaches whereby a local IT or security team member can collect data and then upload it via file share to a place that the main office can copy it from. While not as fast as a system that immediately sends data back to be analyzed, a file share approach can be more reliable.
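The main risk with a file share drop is that the main office picks up a half-copied file. A common pattern, sketched below in Python, is to copy under a temporary name, verify the copy’s hash, and only then rename it into place. The `.partial` suffix and the `pick_up` helper are hypothetical. A rename within the same volume is usually atomic, but check how your particular file share behaves.

```python
import hashlib
import os
import shutil
from pathlib import Path
from typing import List


def sha256_of(path: Path) -> str:
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def drop_to_share(archive: Path, share_dir: Path) -> Path:
    """Remote side: copy archive to share_dir under a temporary name, verify, then rename."""
    partial = share_dir / (archive.name + ".partial")
    final = share_dir / archive.name
    shutil.copyfile(archive, partial)
    if sha256_of(partial) != sha256_of(archive):
        partial.unlink()
        raise OSError(f"copy of {archive.name} failed hash verification")
    os.replace(partial, final)  # readers only ever see the finished file name
    return final


def pick_up(share_dir: Path, inbox: Path) -> List[str]:
    """Main-office side: move completed archives (never *.partial) into inbox."""
    inbox.mkdir(parents=True, exist_ok=True)
    moved = []
    for p in sorted(share_dir.glob("*.zip")):
        shutil.move(str(p), str(inbox / p.name))
        moved.append(p.name)
    return moved
```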

Examples

Let’s look at some example Windows-based tools to make these ideas more concrete. This is by no means a comprehensive list; these are tools that highlight some of the concepts above.

If you want a longer list of tools, check out Meir Wahnon’s “Awesome Incident Response” GitHub page.

SysInternals Command Line Tools

One of the classic incident response toolsets is Microsoft’s Sysinternals command-line utilities. These tools provide many pieces of information that can be useful during a response. A typical approach is to run five or six (or more) of them from a USB drive or network share, save the results to text files, and then manually review them for suspicious data.
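The "run several tools and save the results to text files" workflow is easy to script. Below is a minimal Python sketch. The tool list uses Sysinternals utility names (`pslist`, `psloggedon`, `tcpvcon`) with no arguments as placeholders, so check each tool’s usage for the switches you want. Sysinternals tools also ask you to accept their EULA on first run (for example with the `-accepteula` switch).

```python
import subprocess
from pathlib import Path
from typing import Dict, List

# Placeholder commands: Sysinternals tools must be on PATH or given as full paths,
# and you should add the switches you actually want for each one.
TOOLS: Dict[str, List[str]] = {
    "pslist": ["pslist.exe"],
    "psloggedon": ["psloggedon.exe"],
    "tcpvcon": ["tcpvcon.exe"],
}


def run_tools(tools: Dict[str, List[str]], out_dir: Path, timeout: int = 300) -> Dict[str, int]:
    """Run each command, saving stdout+stderr to out_dir/<name>.txt.

    Returns each tool's exit code, or -1 if it could not be started or timed out.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    status: Dict[str, int] = {}
    for name, cmd in tools.items():
        out_file = out_dir / f"{name}.txt"
        try:
            proc = subprocess.run(cmd, capture_output=True, text=True,
                                  errors="replace", timeout=timeout)
            out_file.write_text(proc.stdout + proc.stderr, encoding="utf-8")
            status[name] = proc.returncode
        except (FileNotFoundError, subprocess.TimeoutExpired) as exc:
            # Record the failure instead of silently skipping the tool.
            out_file.write_text(f"collection failed: {exc}\n", encoding="utf-8")
            status[name] = -1
    return status
```

For example, `run_tools(TOOLS, Path(r"E:\triage\WKS-042"))` would write one text file per tool to a (hypothetical) USB drive folder. Note that this only automates collection; the review is still manual.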

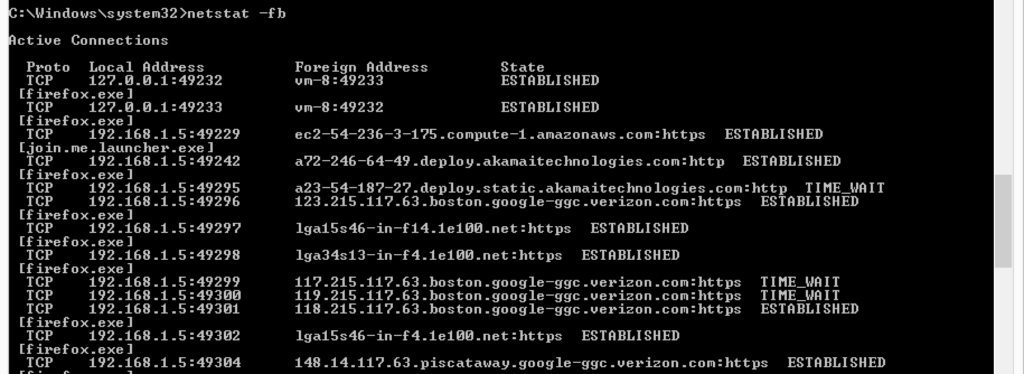

Here is an example of running the ‘netstat’ tool to look at active connections.
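Part of the manual review of this output can be scripted. `netstat` is built into Windows; with `-ano` it lists all connections numerically along with the owning process ID. The Python sketch below parses that text and keeps established connections to non-loopback addresses. It assumes English-language output (state names such as `ESTABLISHED` may be localized), and the sample text is illustrative, not from a real incident.

```python
from typing import List, NamedTuple


class Conn(NamedTuple):
    proto: str
    local: str
    remote: str
    state: str  # empty for UDP, which has no connection state
    pid: int


def parse_netstat(text: str) -> List[Conn]:
    """Parse `netstat -ano` output into Conn records, skipping headers."""
    conns: List[Conn] = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 5 and parts[0] == "TCP":
            proto, local, remote, state, pid = parts
        elif len(parts) == 4 and parts[0] == "UDP":
            proto, local, remote, pid = parts
            state = ""
        else:
            continue  # banner, column headers, blank lines
        if pid.isdigit():
            conns.append(Conn(proto, local, remote, state, int(pid)))
    return conns


def external_established(conns: List[Conn]) -> List[Conn]:
    """Keep established TCP connections whose remote end is not loopback."""
    return [c for c in conns
            if c.state == "ESTABLISHED" and not c.remote.startswith(("127.", "[::1]"))]


SAMPLE = """
Active Connections

  Proto  Local Address          Foreign Address        State           PID
  TCP    0.0.0.0:135            0.0.0.0:0              LISTENING       1032
  TCP    192.168.1.20:49712     203.0.113.7:443        ESTABLISHED     4820
  TCP    127.0.0.1:49670        127.0.0.1:49671        ESTABLISHED     3312
  UDP    0.0.0.0:5353           *:*                                    2204
"""

if __name__ == "__main__":
    for c in external_established(parse_netstat(SAMPLE)):
        print(f"PID {c.pid}: {c.local} -> {c.remote}")
    # prints: PID 4820: 192.168.1.20:49712 -> 203.0.113.7:443
```

The PID is what ties a suspicious connection back to a process in the process listing, which is where the real triage question gets answered.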

Let’s review these tools based on some of the criteria from above.

| Criterion | Evaluation |
| --- | --- |
| Automated | No. These tools are not automated, though they can be the foundation of other automated solutions. If you run them directly, you need to remember their command-line arguments. |
| Updates | Because they are not automated, it is up to you to know which tools to run and what to look for. |
| Collaboration | No. These tools produce text file outputs that need to be fed into another system to support collaboration. |
| Depth | Triage. These tools do not go deep enough to allow for a root cause analysis of an endpoint. |
| Integration | No. Additional automation is needed to integrate these tools. |
| Preservation | Yes. The output of these tools can be preserved for future reference, but you need to develop an archival system. |
| Administrator Access | Run Time. These tools require administrator access when you run them to collect all data. |
| Remote Connectivity | Because you will often need to copy several of the Sysinternals tools to the endpoint, these tools are difficult to run remotely. But once they are running, they work well in remote locations because they transfer small amounts of data. |

SysInternals Graphical Interface Tools

Microsoft offers some of its Sysinternals features as graphical tools. These were built for IT administrators but can also be used for incident response. They do have limitations, though: they don’t let you preserve all of the results, and they require you to interact manually with the remote system for the analysis (and a full investigation will require multiple tools).

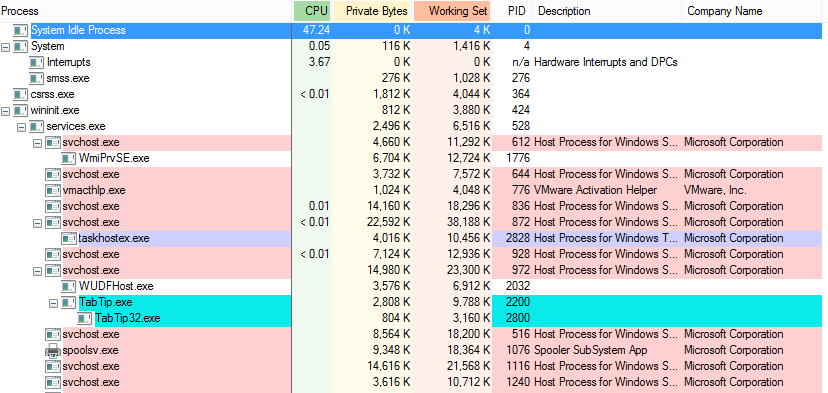

Here is an example of running Process Explorer to look at active processes.

| Criterion | Evaluation |
| --- | --- |
| Automated | No. You’ll need to run several tools and interpret many of the results yourself. Some automation exists, such as checking files against VirusTotal and verifying signatures. |
| Updates | Because they are not automated, it is up to you to know which tools to run and what to look for. |
| Collaboration | No. The primary output of these tools is a graphical interface, so it is not easy to share. |
| Depth | Triage. Like the command-line tools, these tools do not go deep enough to allow for a root cause analysis of an endpoint. |
| Integration | None. These tools would be hard to automate, which makes integration difficult. |
| Preservation | Partial. Some of the data displayed by these graphical tools can be saved to text files, but you need to develop an archival system. |
| Administrator Access | Run Time. If you want to collect all information, you will need administrator access. Without administrator credentials, they show less data. |
| Remote Connectivity | Not really. These tools require user interaction, so remote use means making a remote desktop connection to the target system. |

Cyber Triage

Our Cyber Triage tool is agentless and automates the collection and analysis of data. The collection tool can be remotely deployed and the results are sent back to a central database for analysis. The software analyzes the collected data for malware and suspicious data.

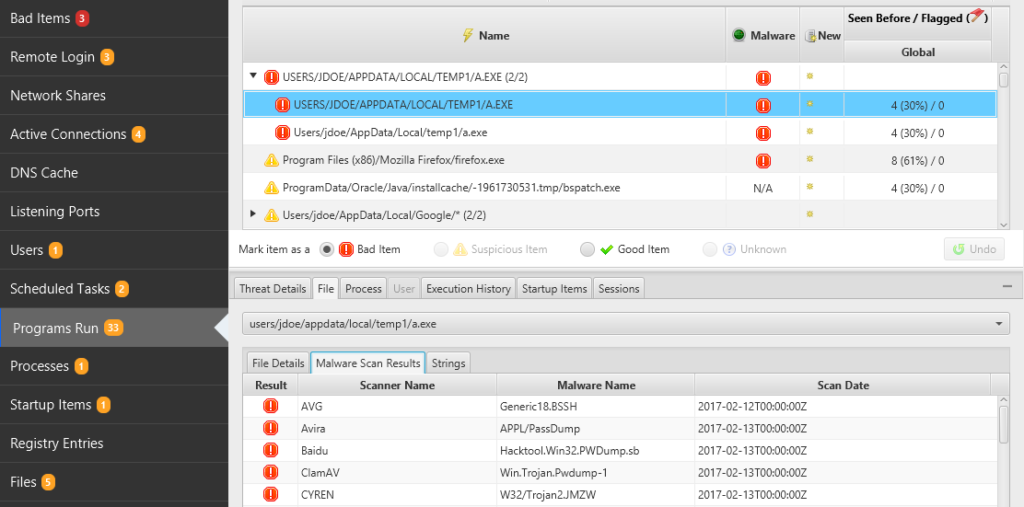

Here is a screenshot of Cyber Triage showing programs that were run on the system (from various registry keys and files) and flagging certain files based on malware scans and heuristics.

| Criterion | Evaluation |
| --- | --- |
| Automated | Yes. Both collection and analysis are automated. |
| Updates | Malware scanning uses several engines that are updated (via OPSWAT), and heuristics are updated with each release. |
| Collaboration | Yes. The Team version allows multiple users to access the same data and see results. Users can also see how common or rare data is based on previous investigations. |
| Depth | Triage. This tool focuses on triage, but the data can be integrated with our Autopsy forensics tool for a deep dive analysis. |
| Integration | Yes. The Team version has a REST API that can be called by SIEMs and ticketing systems. |
| Preservation | Yes. The data is stored in a central database. |
| Administrator Access | Run Time. You need administrator access when you run the collection tool. Local IT administrators can run it from a USB drive and send the data back to the security team. |
| Remote Connectivity | The collection tool can either be pushed to the remote host over the network (via PsExec) or run from a USB drive. The results can either be sent over the network or saved back to the USB drive. It was designed to work in a variety of environments. |

Live Response Collection

The Live Response Collection from BriMor Labs automates the collection of data. On a Windows system, it wraps the previously described Sysinternals command-line tools (and other tools) to provide a more automated collection experience. It does not, however, offer additional analytics on top of the collection.

| Criterion | Evaluation |
| --- | --- |
| Automated | Partial. Collection is automated, but analysis is not. |
| Updates | Additional collection tools can be added with each release, but you will need to know what to look for. |
| Collaboration | No. These tools produce text file outputs that need to be fed into another system to support collaboration. |
| Depth | Triage. These tools do not typically go deep enough to allow for a root cause analysis of an endpoint. |
| Integration | No. Additional automation is needed to integrate these tools. |
| Preservation | Yes. The output of these tools can be preserved for future reference. |
| Administrator Access | Run Time. These tools require administrator access when you run them to collect data. |
| Remote Connectivity | The script runs a variety of command-line tools, so it is really a collection of tools. Because of this, it is difficult to copy all of the files to a remote host and run them there. But once they are running, they work well in remote locations because they transfer small amounts of data. |

Kansa

There are several PowerShell-based IR tools out there. Let’s look at one of them, Kansa. It is a framework that takes advantage of the Windows PowerShell infrastructure to run scripts on remote hosts and collect data. Its main focus is automating data collection, and it also provides some analytics on the data to help with frequency analysis.
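Frequency analysis (often called stacking) compares the same artifact across many hosts and surfaces the rare entries, since something present on only one or two hosts is usually more worth a look than something present on every host. Kansa’s own analysis scripts are PowerShell; the Python sketch below only illustrates the general technique, and the host names and autorun paths are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def stack(host_items: Dict[str, Iterable[str]], max_hosts: int = 1) -> List[Tuple[str, List[str]]]:
    """Return (item, hosts) pairs for items seen on at most max_hosts hosts.

    Each item is counted once per host, and results are ordered rarest first.
    """
    seen_on: Dict[str, Set[str]] = defaultdict(set)
    for host, items in host_items.items():
        for item in items:
            seen_on[item].add(host)
    rare = [(item, sorted(hosts)) for item, hosts in seen_on.items() if len(hosts) <= max_hosts]
    return sorted(rare, key=lambda pair: (len(pair[1]), pair[0]))


# Hypothetical autorun entries collected from three workstations.
AUTORUNS = {
    "WKS-01": [r"C:\Windows\System32\SecurityHealthSystray.exe", r"C:\Program Files\Vendor\agent.exe"],
    "WKS-02": [r"C:\Windows\System32\SecurityHealthSystray.exe", r"C:\Program Files\Vendor\agent.exe"],
    "WKS-03": [r"C:\Windows\System32\SecurityHealthSystray.exe", r"C:\Program Files\Vendor\agent.exe",
               r"C:\Users\bob\AppData\Roaming\upd.exe"],
}

if __name__ == "__main__":
    for item, hosts in stack(AUTORUNS):
        print(item, hosts)
```

Stacking works best with many comparable hosts; with only a handful, "rare" tells you much less.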

| Criterion | Evaluation |
| --- | --- |
| Automated | Partial. Collection is automated, and analysis has some automation. |
| Updates | New plug-in modules can be contributed to keep it up to date, but you will need to know what to look for. |
| Collaboration | No. The tools produce output that must be added to another system to enable collaboration. |
| Depth | Triage. The existing modules focus on triage, but PowerShell could be used to do a deep dive analysis as well. |
| Integration | No. Additional automation is needed to integrate these tools. |
| Preservation | Yes. The output of these tools can be preserved for future reference. |
| Administrator Access | Run Time. These tools require administrator access when you run them to collect data. |
| Remote Connectivity | The framework requires PowerShell Remoting to be enabled in your environment so that the scripts can run on remote systems. |

Volatility

There are several tools that focus solely on memory forensics, so let’s talk about the most popular one, Volatility. Volatility is an analysis tool, which means that you need another tool to collect memory. Fortunately, many memory collection tools are fairly automated and require only a single command. Volatility lets the user apply many techniques to view processes and data in memory.
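For example, a first-pass triage of a memory image might run only a handful of plugins. This Python sketch builds and runs Volatility 3 commands of the form `vol -f <image> <plugin>`. The plugin list is our illustrative choice of triage-oriented plugins, and plugin names differ between Volatility 2 and 3, so confirm them with `vol -h` for your installed version.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List, Sequence

# Illustrative triage subset (Volatility 3 plugin names).
TRIAGE_PLUGINS = ("windows.pslist", "windows.pstree", "windows.cmdline",
                  "windows.netscan", "windows.malfind")


def vol_commands(image: Path, plugins: Sequence[str], vol: str = "vol") -> List[List[str]]:
    """Build one `vol -f <image> <plugin>` command per plugin."""
    return [[vol, "-f", str(image), plugin] for plugin in plugins]


def run_plugins(image: Path, out_dir: Path,
                plugins: Sequence[str] = TRIAGE_PLUGINS, vol: str = "vol") -> None:
    """Run each plugin, saving stdout to <plugin>.txt and stderr to <plugin>.log."""
    if shutil.which(vol) is None:
        raise FileNotFoundError(f"{vol!r} is not on PATH; is Volatility 3 installed?")
    out_dir.mkdir(parents=True, exist_ok=True)
    for plugin, cmd in zip(plugins, vol_commands(image, plugins, vol)):
        result = subprocess.run(cmd, capture_output=True, text=True, errors="replace")
        (out_dir / f"{plugin}.txt").write_text(result.stdout, encoding="utf-8")
        (out_dir / f"{plugin}.log").write_text(result.stderr, encoding="utf-8")
```

A call such as `run_plugins(Path("WKS-042.raw"), Path("vol_out"))` (hypothetical file names) covers the triage subset; the remaining plugins stay available for a deep dive.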

| Criterion | Evaluation |
| --- | --- |
| Automated | Partial. Some of the plug-ins simply display data, and others highlight suspicious content. |
| Updates | There is an active community making plug-in modules, but you need to know which to install and what to look for. |
| Collaboration | No. The tools produce output that must be added to another system to enable collaboration. |
| Depth | Triage and Deep Dive. A subset of the modules can be used for a triage investigation, while others help answer deeper questions. |
| Integration | No. Additional automation is needed to integrate these tools. |
| Preservation | Yes. The output of these tools can be preserved for future reference, and you have a memory image that can be used as evidence. |
| Administrator Access | None. The collection tools require administrator access, but Volatility needs access only to the memory image. |
| Remote Connectivity | None. Volatility is not responsible for getting the memory image from the remote system to the analysis system. |

General EDR

Endpoint Detection and Response (EDR) tools have “response” in their name, so I wanted to include them here. EDRs come in many forms and are changing rapidly, so rather than review specific products, I’ll evaluate general EDR concepts against the criteria described above.

| Criterion | Evaluation |
| --- | --- |
| Automated | Depends. Some of the tools have automated collection and analysis, while others offer remote access to the system and leave the analysis to you. |
| Updates | Depends on the automation of the solution. |
| Collaboration | Often. Many of the tools store results in a central location, which makes it easier to collaborate. But not all tools do, and not all offer ways for teammates to work together. |
| Depth | Depends. Some EDR tools are higher-level and collect only the data needed for their detections. Other tools allow the user to do a deep dive investigation. |
| Integration | Often. Many of these tools can integrate with other systems. |
| Preservation | Often. Many of these tools save data so that you can later retrieve it. |
| Administrator Access | Agents. Many of these tools are agent-based and therefore require administrator access to install the agent. |
| Remote Connectivity | Yes. EDR tools are designed to run on the remote target system. Not all EDR tools, though, allow the user to interact with the remote endpoint during an investigation. |
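One way to apply these criteria is to write down what you need and filter the candidates against it. The Python sketch below transcribes a simplified subset of the assessments in the tables above. EDR is omitted because its answers vary by product, and Cyber Triage’s collaboration and integration entries refer to its Team version. The three-level `no`/`partial`/`yes` scale and the `shortlist` helper are our own simplification.

```python
from typing import Dict, List

# Ordered scale so that "yes" satisfies a "partial" requirement.
LEVELS = {"no": 0, "partial": 1, "yes": 2}

# Simplified from the comparison tables in this article.
TOOLS: Dict[str, Dict[str, str]] = {
    "Sysinternals command line": {"automated": "no", "collaboration": "no", "integration": "no", "preservation": "yes"},
    "Sysinternals GUI": {"automated": "no", "collaboration": "no", "integration": "no", "preservation": "partial"},
    "Cyber Triage": {"automated": "yes", "collaboration": "yes", "integration": "yes", "preservation": "yes"},
    "Live Response Collection": {"automated": "partial", "collaboration": "no", "integration": "no", "preservation": "yes"},
    "Kansa": {"automated": "partial", "collaboration": "no", "integration": "no", "preservation": "yes"},
    "Volatility": {"automated": "partial", "collaboration": "no", "integration": "no", "preservation": "yes"},
}


def shortlist(tools: Dict[str, Dict[str, str]], requirements: Dict[str, str]) -> List[str]:
    """Return tools that meet or exceed every required minimum level, alphabetically."""
    return sorted(name for name, attrs in tools.items()
                  if all(LEVELS[attrs[criterion]] >= LEVELS[minimum]
                         for criterion, minimum in requirements.items()))


if __name__ == "__main__":
    print(shortlist(TOOLS, {"automated": "partial", "preservation": "yes"}))
```

The value is less in the code than in being forced to write the requirements down: a one-person team with outside help on retainer will fill in that dictionary very differently from a large in-house IR team.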

Conclusion

When building an incident response capability, you need to take the needs of both the IT and security teams into consideration. Many of the tools out there are fairly manual and meant for power users. If your company needs a basic host triage capability, make sure your tools provide the level of automation you need. Are there other criteria that you look at? Let us know.

In future posts in this series, we’ll cover how to analyze the data collected by these tools. In the meantime, if you want to try our Cyber Triage tool, then get a free evaluation copy here.